This coming Monday, July 21, kicks off the fourth annual TrustCon, hosted in San Francisco by the Trust and Safety Professional Association. If you’re going, I hope this explainer will spark some hallway discussions, and encourage you to rethink your relationship with the community you work so hard to protect.

I also hope that my last boss at Google, Royal Hansen, will be happy to see me finally make a legitimate Plato reference.

“An imperfect democracy is a misfortune for its people, but an imperfect authoritarian regime is an abomination.”

What is guardianship?

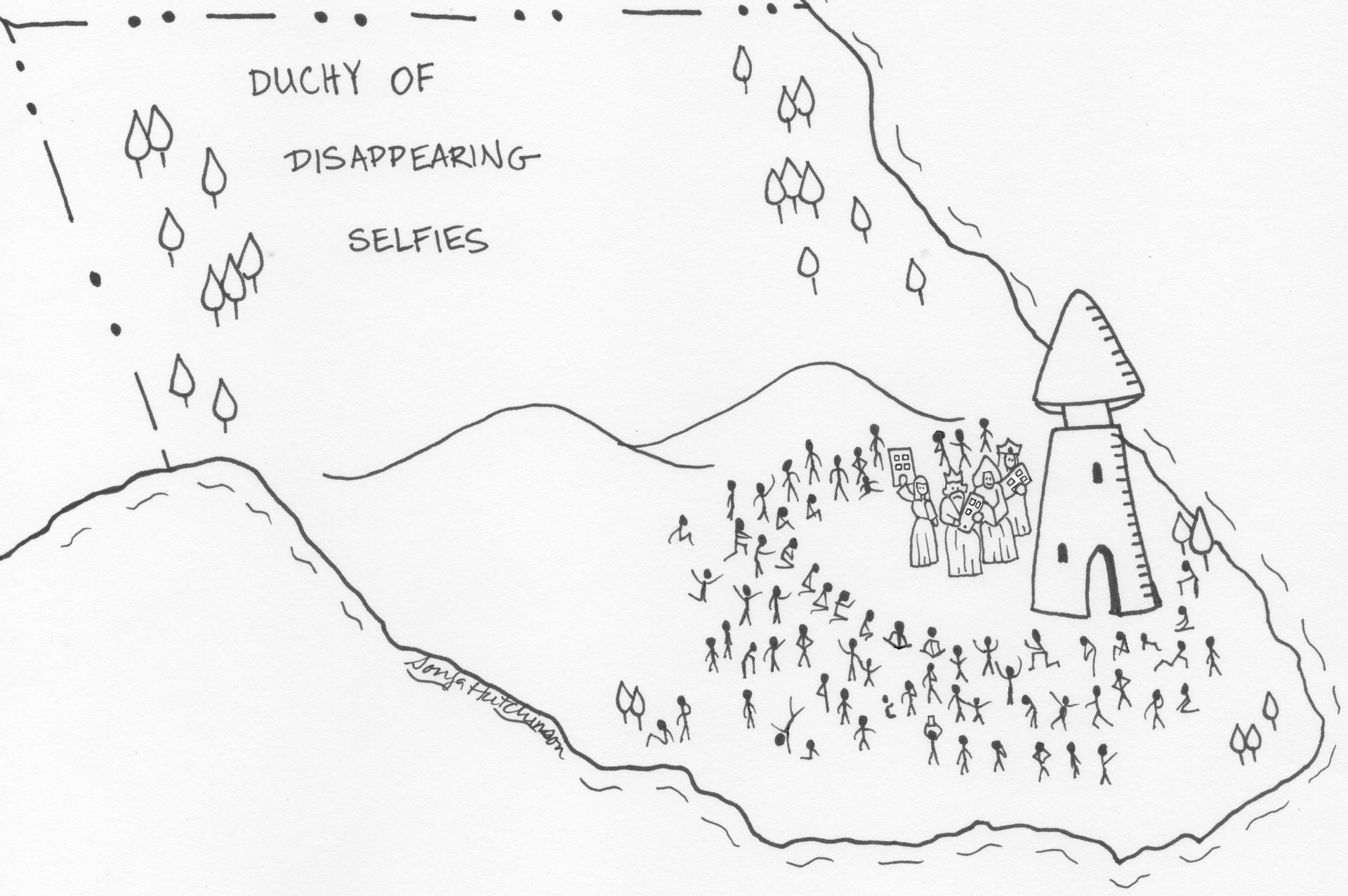

Guardianship argues that societies will be most successful if they grant absolute authority to a small minority of people who are identified and trained as moral and technical leaders. This is better than democracy, because most people cannot understand what is in the best interest of society, and so are not qualified to govern.

This isn’t as radical as it sounds at first, since most people already agree that one major group of people should be excluded from government: children. In other words, to paraphrase a disreputable old joke, we’ve already established that you want to restrict the vote. Now we’re just haggling over where to draw the line.

Who came up with the term?

Plato proposed guardianship in the Republic (375 BC), giving us the term “philosopher-king.” Vladimir Lenin echoed this in What Is To Be Done? (1902), arguing that a revolutionary “vanguard,” who understood history and economics, was needed to guide the transition from capitalism to communism before the working class would be ready to lead on its own.

Guardianship is also discussed thoroughly in chapters 4 and 5 of “Democracy And Its Critics” (1989) by the eminent political scientist Robert Dahl (1915-2014), a major theorist and defender of democracy.

What does Plato have to do with Internet platforms?

Virtually every tech company’s trust & safety approach is rooted deeply in the idea of guardianship. For example, in 2024 Evan Spiegel of Snap told Bloomberg, “We really want to take responsibility for the experience that we’re providing to our community.” He did not say, “We really want to have our community vote for Snap’s Global Head Of Platform Safety.”

The current holder of that title, Jacqueline Beauchere, seems like a great person, but her role is a form of guardianship because she can only be replaced by Snap executives, not the Snapchat community. Snap does solicit feedback with a Safety Advisory Board and a Council For Digital Well-Being, but as far as I can tell, they only meet a few times a year and don’t have any decision-making authority.

Snap did come out in support of the proposed Kids Online Safety Act in the US, which sounds like a willingness to cede guardianship authority to government. Consider, however, that the decision to support the bill was made by corporate officials, not by the Snapchat community.

Why is guardianship bad for online communities?

Per Dahl, guardianship relies on three flawed assumptions. These cause special kinds of trouble online.

Only guardians can understand and protect the “public good” of a community, versus each person only considering their own interests. Online, the public good is conflated with corporate goals like growth and revenue, which becomes a way to excuse millions of bad individual experiences, as long as the top-line numbers look OK. [See Facebook CTO Andrew “Boz” Bosworth’s infamous “The Ugly” memo.]

Guardians have superior knowledge of the art of governing, which makes all of their decisions better than the community’s. Online, this puts unfair pressure on trust & safety employees to account for all the uncertainty, risk, and trade-offs of a decision themselves, when it would be better to admit there is no correct answer, and turn the problem over to a democratic process.

Guardians have the inherent virtue to always seek the broadest general good, so there is no need for democratic checks and balances. Online, when career progression or corporate survival is at stake, and there’s no direct accountability to the community, is it any surprise that companies repeatedly make decisions that favor their bottom line over safety?

Can you give me an example?

Tech executives love to say they are “doing all they can” to make their platforms safe. Don’t be so sure.

Let’s take a closer look at Snapchat. The Information reported that the budget for Snap’s Trust & Safety team was $164 million in 2022, up from $131 million in 2021. Sounds like a lot, right? Consider that just last month, Evan Spiegel told the Augmented World Expo that the company has spent $3 billion since 2014 developing augmented reality glasses.

How many Snapchat users (and their parents) are happy that Spiegel diverted billions of dollars of revenue into an unproven new business line, instead of moving faster to make his existing product safer?

To be fair, Meta has spent an order of magnitude more, $46 billion, on virtual reality tech just since 2021. That could have bought a lot of safety improvements for Facebook, Instagram, and WhatsApp.

Whatever you want to call this kind of decision, it sure ain’t philosopher kingship.

How can we start to change this?

Support efforts to move safety decision-making away from the unelected guardians at platforms. For example, the EU’s Digital Services Act gives users the power to appeal platform decisions to designated out-of-court dispute settlement bodies (ODS). It is still early days, but the EU lists six certified bodies, and over 1,300 cases have been submitted so far.

Note that we need to demand changes in safety process and authority, not just better safety outcomes. The problem is not that the guardians employed by each platform are making bad safety decisions. The problem is that they, and not we, have the power to make those decisions at all.

“The rights and interests of every or any person are only secure from being disregarded when the person interested is himself able, and habitually disposed, to stand up for them…. Human beings are only secure from evil at the hands of others in proportion as they have the power of being, and are, self-protecting.” (John Stuart Mill, Considerations on Representative Government, 1861)

Ideas? Feedback? Criticism? I want to hear it, because I am sure that I am going to get a lot of things wrong along the way. I will share what I learn with the community as we go. Reach out any time at [email protected].